Example project workflow videos

Quick start: download & check out the example project / session featuring a short 360 video of us running around at Audio Ease, mono panning audio, a true ambisonics recording, binaural preview and export suggestions.

Open the practice project START, then open the workflow video and practice along with it.

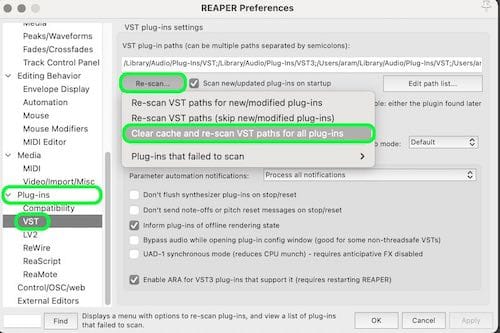

A practice project is also available for Nuendo, but there is no walkthrough video for it. Watch the Reaper VST workflow video and download the practice project to give it a try:

practice project Nuendo

Two manuals

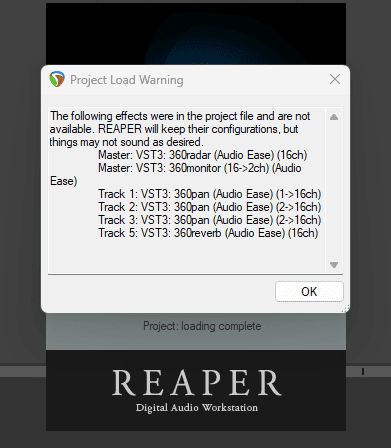

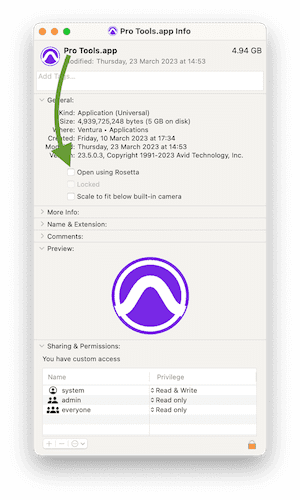

Yes, there are two 360pan suite manuals: one for VST3 use in Reaper and one for AAX use in Pro Tools HD/Ultimate.

DAW Templates

To kickstart your new VR/360 audio post project, use one of our templates. All routing and bussing is already done for you: import the audio and video and start mixing. First order (1OA), second order (2OA) and third order (3OA) templates are included.
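The order of a template determines how many channels its ambisonics bus carries. As a quick reference, here is a minimal sketch using the standard full-sphere formula (order + 1) squared; the function name `ambisonic_channel_count` is our own and is not part of the 360pan suite.

```python
def ambisonic_channel_count(order: int) -> int:
    """Number of channels in a full-sphere ambisonics stream of the given order."""
    if order < 0:
        raise ValueError("order must be non-negative")
    return (order + 1) ** 2


# Channel counts for the three template flavours.
for name, order in (("1OA", 1), ("2OA", 2), ("3OA", 3)):
    print(name, ambisonic_channel_count(order))
```

This gives 4 channels for first order (the quad bounce used for YouTube), 9 for second order (the Facebook export) and 16 for third order.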

Position blur

Mixing non-turning audio, like Voice Overs, using position blur in 360pan.

The first channel of the ambisonics stream is the W channel, which goes to all virtual speakers in the ambisonics-to-binaural converter. This means you can place non-turning mono audio there, which is great for voice overs.

Position blur does this. With position blur all the way open, your panning is gone and the audio is mono and everywhere; with the control all the way down, the panning is very precise and the audio is a point source. With the blur parameter you can make panning less hard and create clouds of audio. This way you can position stereo input, add blur, and create a stereo sensation while the audio does not turn when a user turns around in VR.
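To illustrate the idea, here is a minimal sketch of a first order AmbiX encoder (channel order W, Y, Z, X) with a blur control. It is an assumption made for illustration, not the actual 360pan algorithm: the function name `encode_first_order` is hypothetical, and the linear fade of the directional channels is simply the easiest way to show that full blur leaves only the W channel.

```python
import math


def encode_first_order(sample, azimuth_deg, elevation_deg, blur):
    """Encode one mono sample to first order AmbiX (W, Y, Z, X).

    blur = 0.0 gives a point source, blur = 1.0 leaves only W (omni).
    The linear fade below is an illustrative assumption, not 360pan's method.
    """
    if not 0.0 <= blur <= 1.0:
        raise ValueError("blur must be between 0.0 and 1.0")
    az = math.radians(azimuth_deg)    # counterclockwise from straight ahead
    el = math.radians(elevation_deg)  # upwards from the horizon
    directional = 1.0 - blur          # how much direction survives the blur
    w = sample                        # omni channel, never turns
    y = sample * math.sin(az) * math.cos(el) * directional  # left/right
    z = sample * math.sin(el) * directional                 # up/down
    x = sample * math.cos(az) * math.cos(el) * directional  # front/back
    return (w, y, z, x)


point = encode_first_order(1.0, 90.0, 0.0, blur=0.0)    # hard left point source
everywhere = encode_first_order(1.0, 90.0, 0.0, blur=1.0)  # W only
```

With blur fully open, only W carries signal, so the sound no longer moves when the listener turns their head.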

Export 360pan suite audio to YouTube

Follow these steps to upload 360 video with ambisonics audio to YouTube, so that the viewer gets interactive turning audio when looking around in the VR video.

The official YouTube guide is at:

https://support.google.com

Here's our recipe:

Mix your audio and place (pan) it using the 360pan suite.

Bounce the resulting quad audio to:

- an interleaved file and ask your video producer to add this to the video

- or bounce directly to the QuickTime video, using Pro Tools HD/Ultimate

In both cases, bounce as a quad (four-channel) interleaved, uncompressed WAV audio file.

Then download the Spatial Media Metadata Injector app.

Open your video with the quad ambisonics audio in the Spatial Media Metadata Injector app.

Check the first and last box (Video is spherical and audio is spatial, ambiX)

Inject metadata, upload to YouTube, wait an hour for your 360 video to process.

Watch in a modern browser (Chrome works for sure, Firefox and Safari might work too) to verify you get spatial audio (look around with headphones on!).
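Before you hand the bounce to your video producer, it can help to confirm that the file really has four channels. Here is a minimal sketch using Python's standard `wave` module. The function names `describe_bounce` and `has_expected_channels` are hypothetical, and depending on your Python version the module may reject the extensible WAV headers that some DAWs write for multichannel files.

```python
import wave


def describe_bounce(path):
    """Read basic format information from an uncompressed WAV file."""
    with wave.open(path, "rb") as wav:
        return {
            "channels": wav.getnchannels(),
            "sample_rate": wav.getframerate(),
            "bit_depth": wav.getsampwidth() * 8,
            "frames": wav.getnframes(),
        }


def has_expected_channels(path, expected_channels=4):
    """True when the file has the expected channel count (4 for first order)."""
    return describe_bounce(path)["channels"] == expected_channels
```

Call `has_expected_channels("my_mix.wav")` on a first order bounce, or pass `expected_channels=9` for a second order file.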

Export audio for Facebook

Bounce or export a second order ambisonics AmbiX interleaved audio file (this is the 9-channel output from the Audio Ease 360pan suite).

Use the FB360 encoder app. Under ‘spatial audio’ select your mix and set it to B-format (2nd order ambix). Do not check the 'From Pro Tools' checkbox.

Select your headlocked stereo output and your video and then encode to a Facebook 360 video.

Offline side load in Gear VR

through Samsung VR app

If you have a Samsung Gear VR and a compatible Android phone, you can preview your mixes locally on the HMD without uploading (side loading). Follow this guide to get audio that will enable an immersive experience.

There are two approaches. One uses an ffmpeg droplet to merge video and audio:

- export four channel AmbiX audio

- combine this with your original video by adding the audio as 4-channel AAC; you can use one of the droplets for this

- inject metadata using Google's Spatial Media Metadata Injector app

- copy the injected video to your Android device, for instance by using the SideSync app. Add it to a folder on the root of your device called "MilkVR".

- put the Android device in the Gear VR, run the Samsung VR app from the default Oculus home

- in the Samsung VR app hit the folder for local files then look under side loading and your video should be there.
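For reference, the merge step can be sketched as an ffmpeg command line. This is our guess at what such a merge involves, not the contents of the droplet itself: the function name `build_merge_command` and the file names are hypothetical, and the code only builds the argument list so you can inspect it before running anything.

```python
def build_merge_command(video_path, audio_path, output_path):
    """Build an ffmpeg argument list that muxes a video with a separate audio file."""
    return [
        "ffmpeg",
        "-i", video_path,   # input 0: the original 360 video
        "-i", audio_path,   # input 1: the four channel AmbiX bounce
        "-map", "0:v:0",    # take the picture from the video file
        "-map", "1:a:0",    # take the sound from the audio file
        "-c:v", "copy",     # leave the picture untouched
        "-c:a", "aac",      # encode the audio as AAC
        output_path,
    ]


command = build_merge_command("video.mp4", "ambix_mix.wav", "video_ambix.mp4")
print(" ".join(command))
```

To run it, pass the list to `subprocess.run(command, check=True)` on a machine with ffmpeg installed, then continue with the metadata injection step.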

Or by using the Facebook encoder app:

- export four channel AmbiX audio

- open the FB360 encoder app and set it to export for YouTube video

- drop your video and audio onto the app, set the audio format to first order AmbiX

- encode

- copy the encoded video to your Android device, for instance by using the SideSync app. Add it to a folder on the root of your device called "MilkVR".

- put the Android device in the Gear VR, run the Samsung VR app from the default Oculus home

- in the Samsung VR app hit the folder for local files then look under side loading and your video should be there.

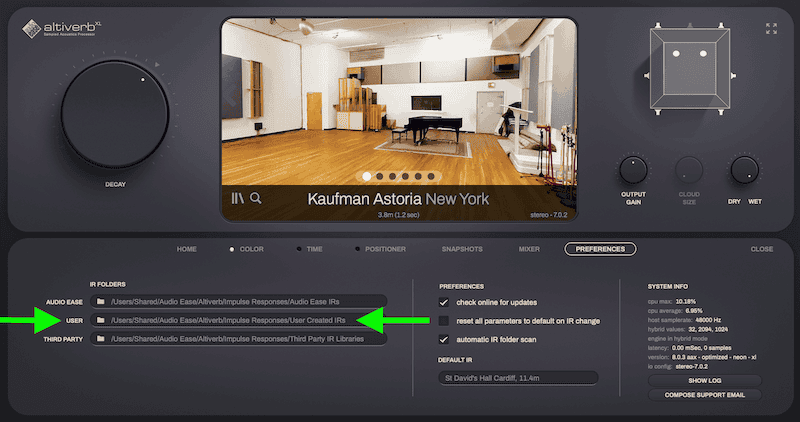

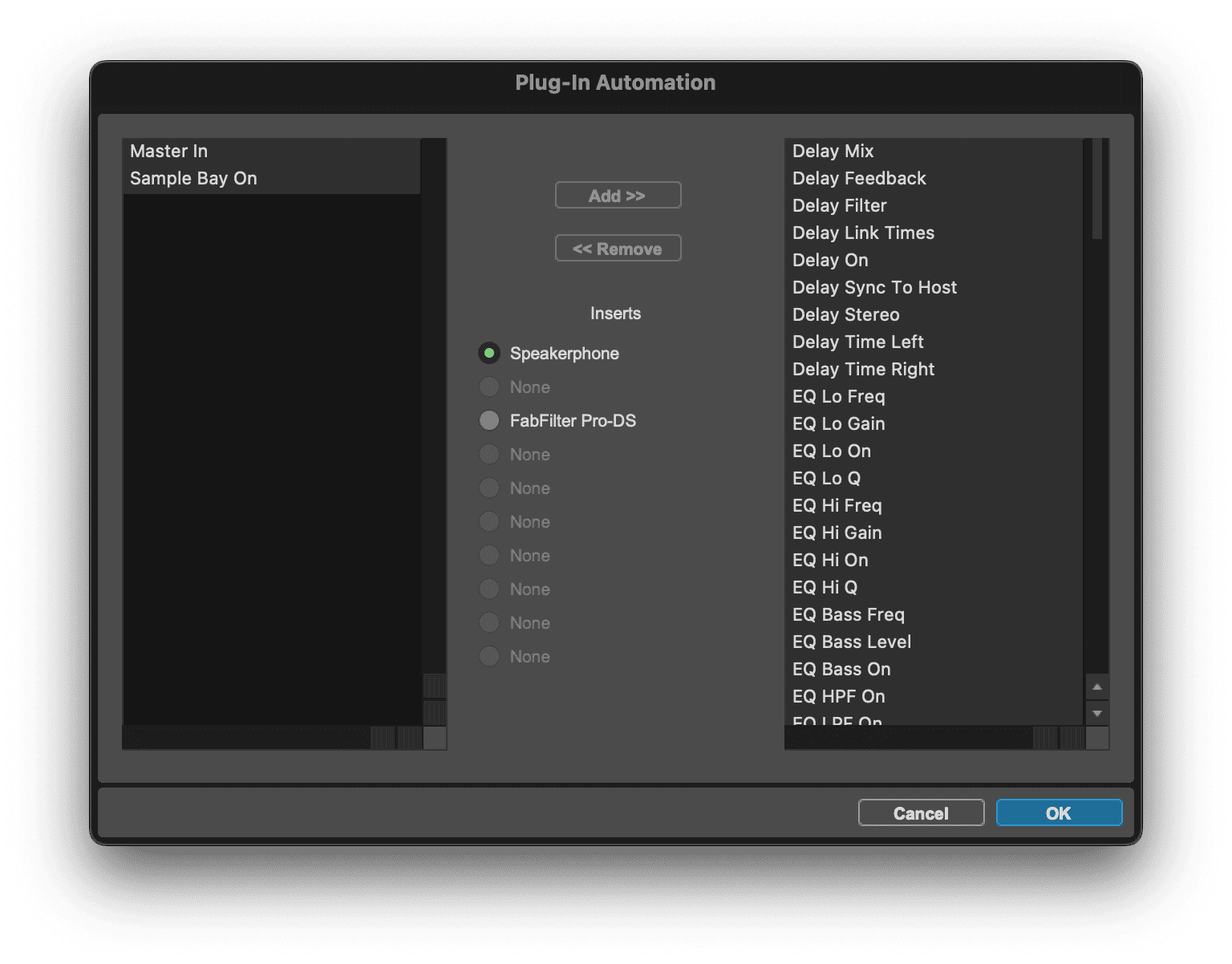

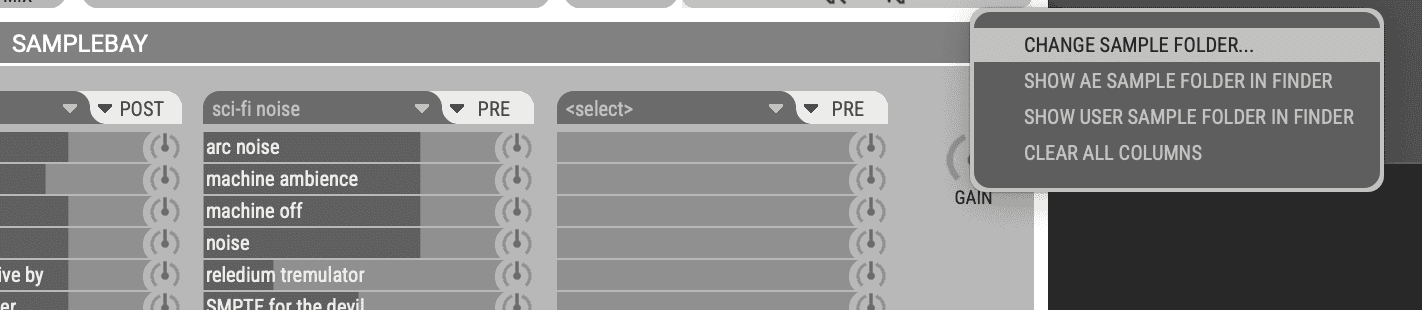

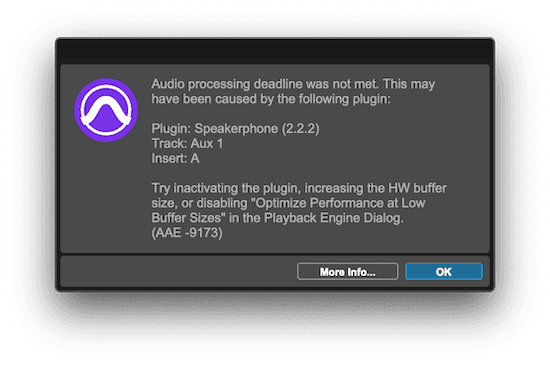

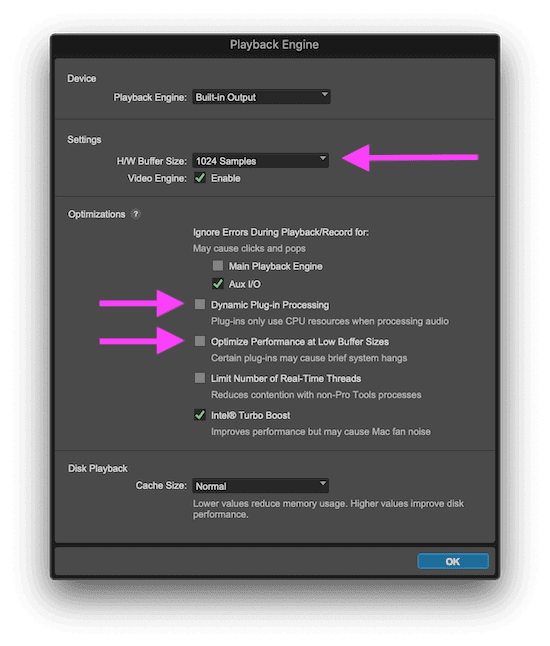

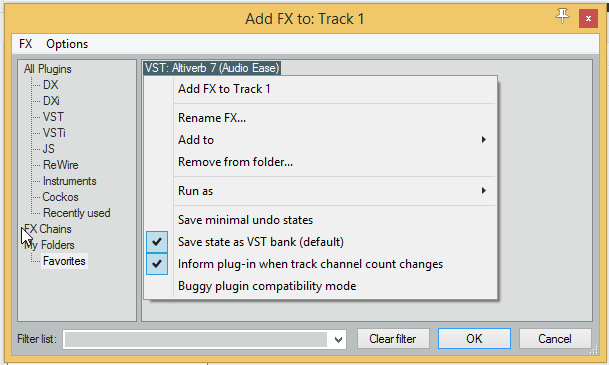

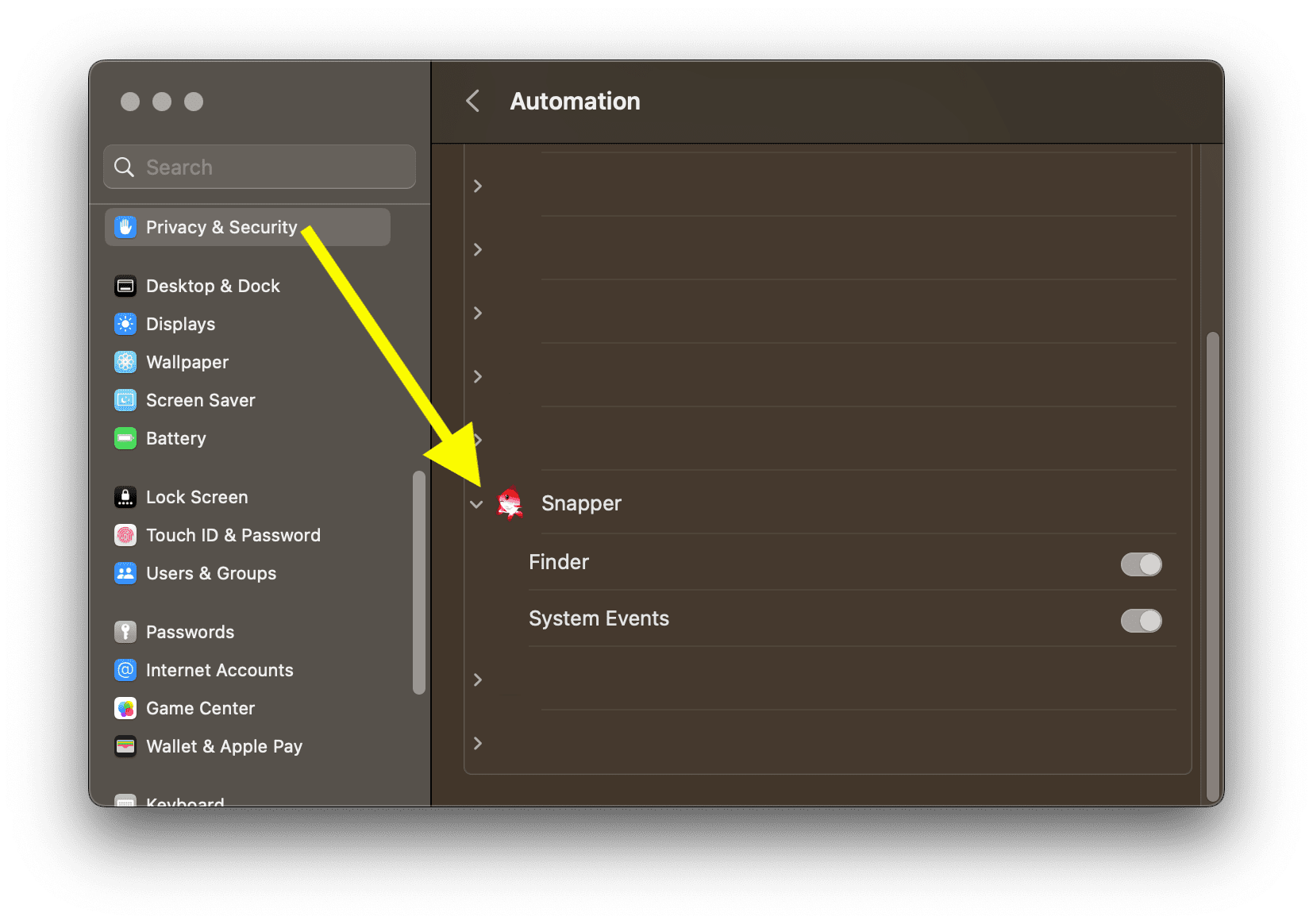

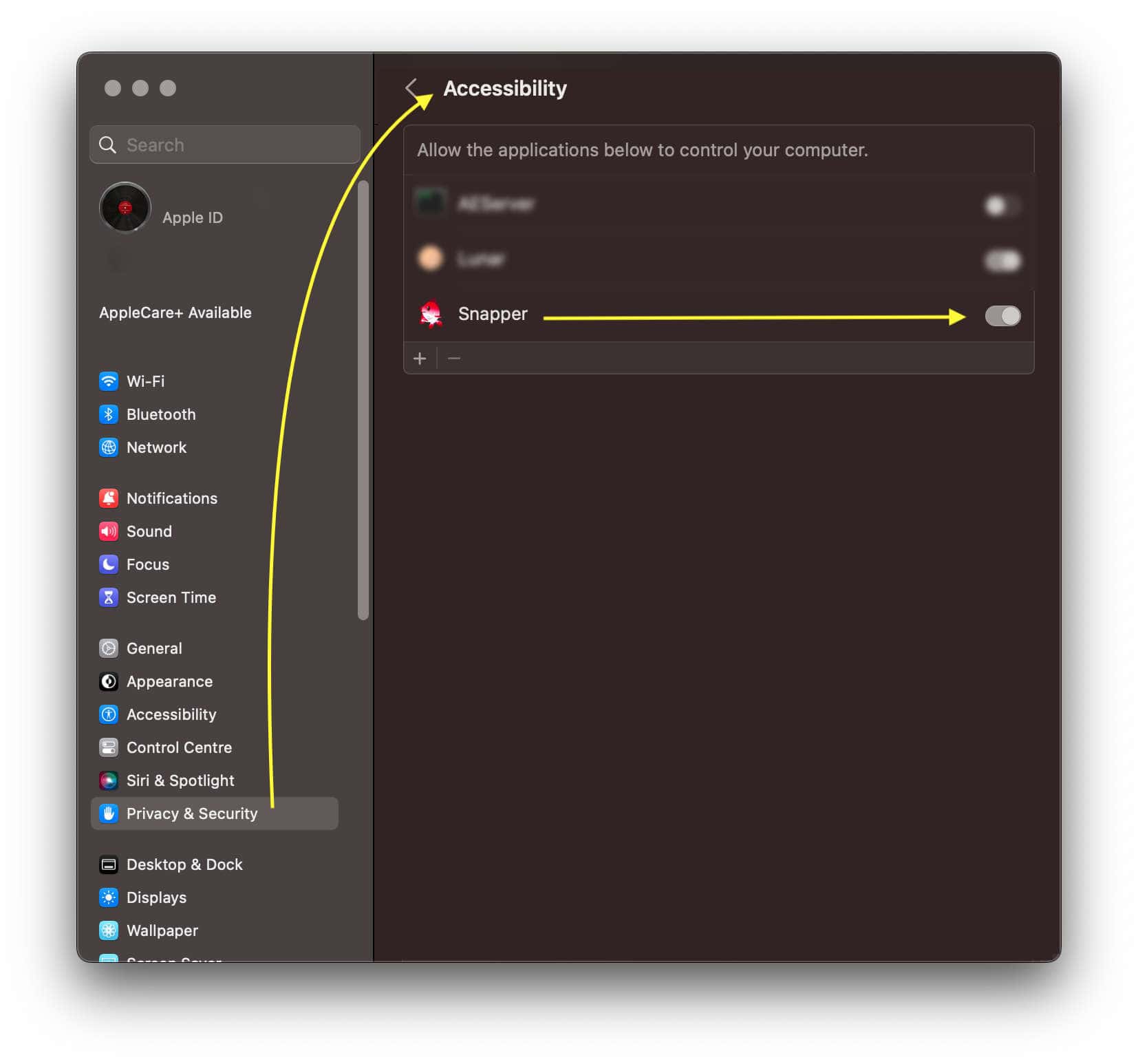

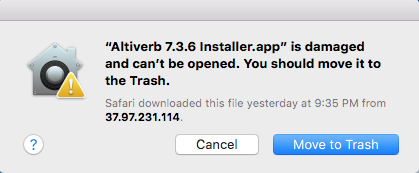

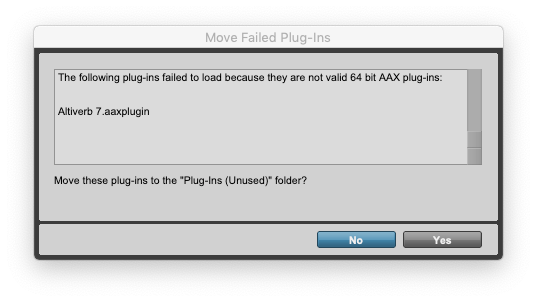

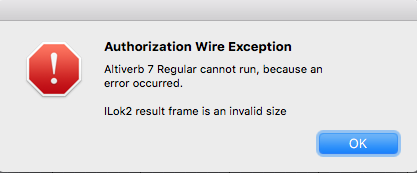

"Speakerphone / Altiverb cannot run, because an error occurred. Encrypted Channel Bad Channel ID (or USB error or frame is an invalid size)"